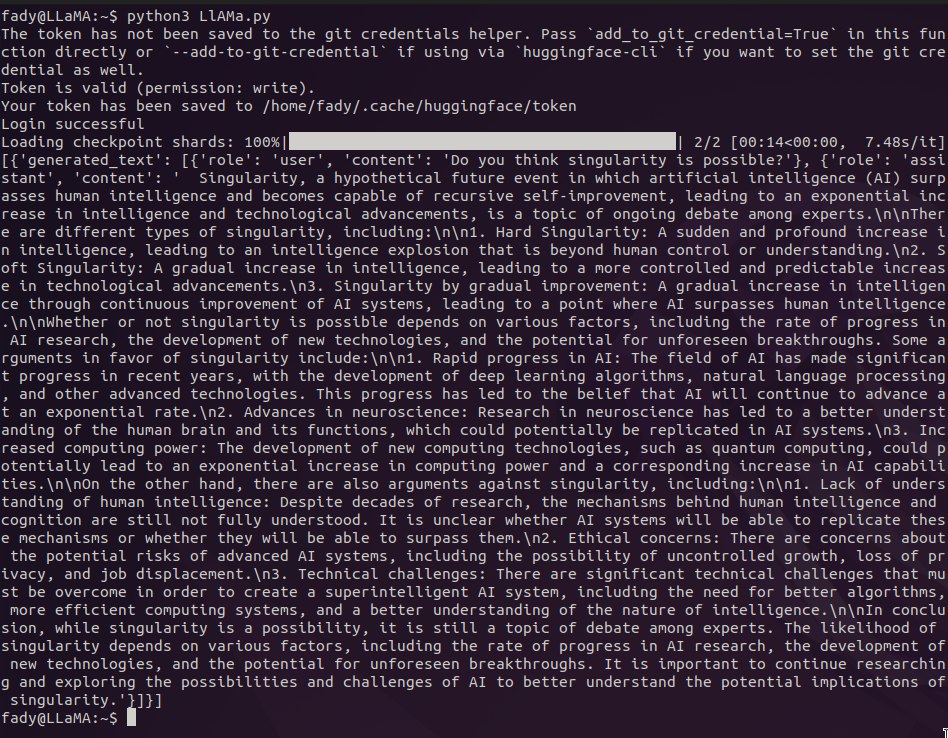

There is something special about running an open-source LLM locally. A few years ago, one would have been thrilled merely to call GPT’s API using free credits, after months on a waiting list just to get access. Now I get to run such models locally, as the image above shows, with no subscription and no waiting. Isn’t this truly exciting?
