As promised in my previous how-to articles, we are going to build an interactive AI assistant based on what we have learnt so far. In this article we will build the assistant on a Raspberry Pi, make it interactive using Watson Assistant, and give it a voice using Azure Speech.

To follow along with this article you will need to set up your Raspberry Pi as described here. You will also need to configure Azure Speech as detailed in this article.

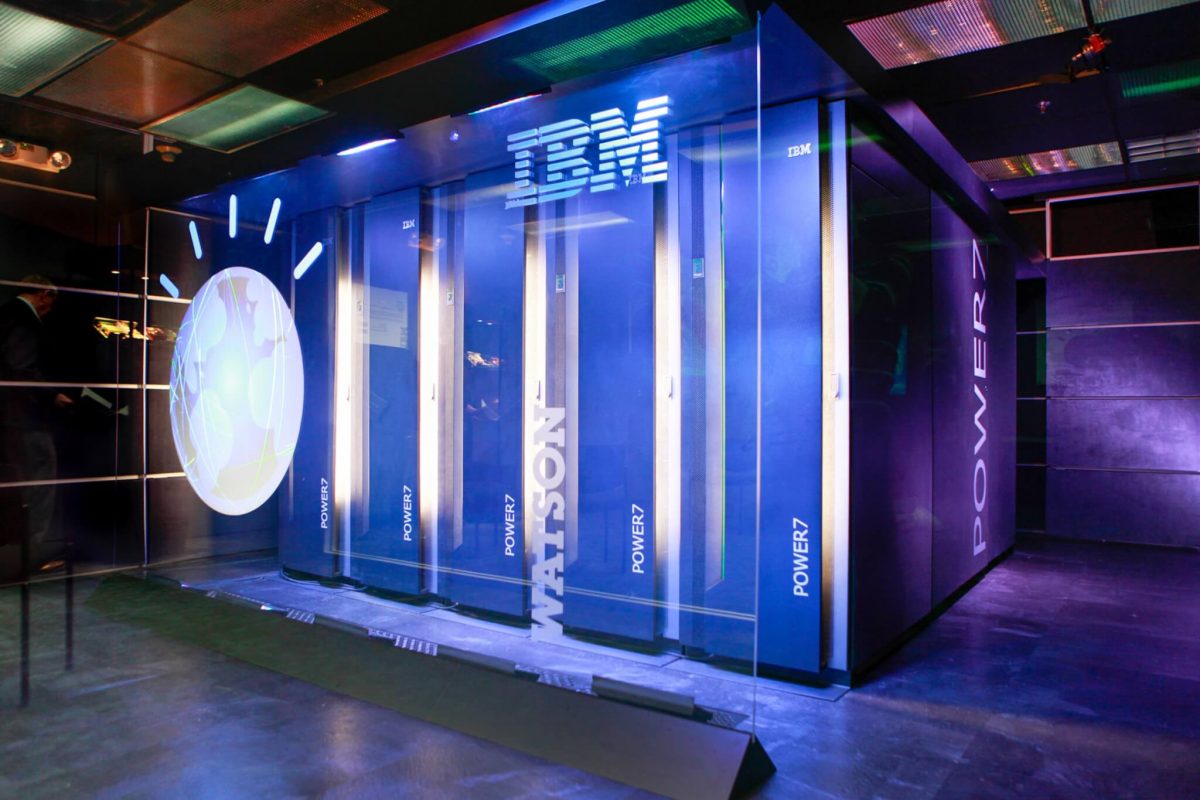

We are going to use Watson Assistant from IBM Cloud. Azure does have a bot service, but I found Watson easier to learn, not to mention that it’s fun to use a Raspberry Pi in a multi-cloud application.

You will need to register for an IBM Cloud account, which you can do for free from here in under 5 minutes using only your email address. After registering you will get some decent free credit, so you can create a Watson Assistant as detailed in this documentation. It’s really exciting how much free stuff you can get as a developer these days.

After setting up Watson Assistant, make sure that you have added a dialog skill, then create an intent as detailed here. In my example I created a simple intent called abilities, which is triggered by asking the assistant what it can do.

When Watson is ready, it’s time to test it. Copy your assistant ID, API key and endpoint URL in place of the placeholders in the Node.js script below.

    const AssistantV2 = require('ibm-watson/assistant/v2');
    const { IamAuthenticator } = require('ibm-watson/auth');

    var sessionid;
    var assistantid = '<id>'; // place your assistant id here

    const assistant = new AssistantV2({
      version: '2020-04-01',
      authenticator: new IamAuthenticator({
        apikey: '<apikey>', // place your api key here
      }),
      url: '<endpoint>', // place your endpoint url here
    });

    // first create an assistant session to be used later
    assistant.createSession({
      assistantId: assistantid
    })
      .then(res => {
        console.log(JSON.stringify(res.result, null, 2));
        sessionid = res.result["session_id"];
        sendMessage("hello"); // when session is ready send a hello message
      })
      .catch(err => {
        console.log(err);
      });

    function sendMessage(message) {
      assistant.message({
        assistantId: assistantid,
        sessionId: sessionid,
        input: {
          'message_type': 'text',
          'text': message
        }
      })
        .then(res => {
          // print the first reply Watson sent back
          console.log(res.result.output.generic[0]["text"]);
        })
        .catch(err => {
          console.log(err);
        });
    }

To run this script you will first need to install the required npm package as follows:

    npm install ibm-watson@^5.6.0

Save the script as something like chat.js and then execute it by typing the command below:

    node chat.js

You should get a JSON response with the session ID, followed by whatever response you configured Watson to give to a generic greeting. This means the Watson Assistant service is ready.
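The test script only looks at the first item of the reply. As a rough sketch of how the rest of a response could be read, the helper below pulls out the top intent and every text reply. The name `summarizeResponse` and the sample object are made up for illustration; the field names (`output.intents`, `output.generic`, `response_type`) are what I would expect from a Watson Assistant v2 message result, so check them against the JSON your own assistant returns.

```javascript
// Sketch: summarize a Watson Assistant v2 message result.
// `summarizeResponse` is a hypothetical helper, not part of the ibm-watson SDK.
function summarizeResponse(result) {
  const output = (result && result.output) || {};
  const intents = output.intents || [];
  const generic = output.generic || [];
  return {
    // Assumes intents are listed in descending order of confidence.
    intent: intents.length > 0 ? intents[0].intent : null,
    // Keep only items that carry text; other response types are skipped.
    texts: generic
      .filter(item => item.response_type === 'text' && typeof item.text === 'string')
      .map(item => item.text),
  };
}

// Hand-written sample shaped like a v2 result; the values are invented.
const sample = {
  output: {
    intents: [{ intent: 'abilities', confidence: 0.97 }],
    generic: [
      { response_type: 'text', text: 'I can chat with you.' },
      { response_type: 'image', source: 'https://example.com/pic.png' },
    ],
  },
};

console.log(summarizeResponse(sample));
```

In chat.js this could be called as `summarizeResponse(res.result)` inside the `.then` of `assistant.message`, which also avoids an error when Watson returns no text at all.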

Now it’s time to put things together. You will use Azure Speech to convert speech to text, send the text to the Watson Assistant service on IBM Cloud, get the text response back, and then convert it to human-like speech using Azure Speech again.

To do so, use the code snippet below and replace the placeholders with your Azure Speech and Watson Assistant details as indicated in the code comments.
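Before wiring in the real services, it may help to see that flow as three steps chained together. The sketch below is only an outline of one conversational turn: `handleTurn` and the three step names are hypothetical, and the stand-in functions return canned values so it runs without any cloud credentials.

```javascript
// Sketch of one turn: audio in, spoken answer out.
// The three steps are passed in so the flow can be tried offline.
async function handleTurn(audioFile, steps) {
  const question = await steps.speechToText(audioFile); // Azure Speech: audio -> text
  if (!question) {
    return null; // nothing was recognized, so there is nothing to ask
  }
  const answer = await steps.askAssistant(question);    // Watson Assistant: text -> text
  await steps.textToSpeech(answer);                     // Azure Speech: text -> audio
  return { question, answer };
}

// Stand-in implementations, for illustration only.
const spoken = [];
const fakeSteps = {
  speechToText: async () => 'what can you do',
  askAssistant: async text => `You asked: ${text}`,
  textToSpeech: async text => { spoken.push(text); },
};

const turn = handleTurn('message.wav', fakeSteps);
turn.then(result => console.log(result, spoken));
```

Keeping the steps separate like this makes it easier to test each service on its own before running everything on the Raspberry Pi.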

    // Import required npm packages
    const AudioRecorder = require('node-audiorecorder');
    const fs = require('fs');
    var sdk = require("microsoft-cognitiveservices-speech-sdk");
    var player = require('play-sound')({});

    var subscriptionKey = "<key>"; // replace with your azure speech service key
    var serviceRegion = "northeurope"; // e.g., "westus"
    var filename = "message.wav"; // 16000 Hz, Mono

    const AssistantV2 = require('ibm-watson/assistant/v2');
    const { IamAuthenticator } = require('ibm-watson/auth');

    var sessionid;
    var assistantid = '<id>'; // replace with assistant id

    const assistant = new AssistantV2({
      version: '2020-04-01',
      authenticator: new IamAuthenticator({
        apikey: '<key>', // replace with watson api key
      }),
      url: '<url>', // replace with watson endpoint url
    });

    // create an assistant session to be used later
    assistant.createSession({
      assistantId: assistantid
    })
      .then(res => {
        console.log(JSON.stringify(res.result, null, 2));
        sessionid = res.result["session_id"];
      })
      .catch(err => {
        console.log(err);
      });

    // Create an audio recorder instance that records with sox.
    const audioRecorder = new AudioRecorder({
      program: 'sox',
    }, console);

    // Create write stream.
    const fileStream = fs.createWriteStream(filename, { encoding: 'binary' });

    // Start and write to the file.
    audioRecorder.start().stream().pipe(fileStream);

    setTimeout(recognize, 5000); // wait for 5 seconds then call recognize function

    function recognize() {
      audioRecorder.stop();

      // create the push stream we need for the speech sdk.
      var pushStream = sdk.AudioInputStream.createPushStream();

      // open the file and push it to the push stream.
      fs.createReadStream(filename).on('data', function(arrayBuffer) {
        pushStream.write(arrayBuffer.slice());
      }).on('end', function() {
        pushStream.close();
      });

      // we are done with the setup
      console.log("Now recognizing from: " + filename);

      // now create the audio-config pointing to our stream and
      // the speech config specifying the language.
      var audioConfig = sdk.AudioConfig.fromStreamInput(pushStream);
      var speechConfig = sdk.SpeechConfig.fromSubscription(subscriptionKey, serviceRegion);

      // setting the recognition language to English.
      speechConfig.speechRecognitionLanguage = "en-US";

      // create the speech recognizer.
      var recognizer = new sdk.SpeechRecognizer(speechConfig, audioConfig);

      // start the recognizer and wait for a result.
      recognizer.recognizeOnceAsync(
        function (result) {
          console.log(result);
          // pass the recognized text on to Watson
          sendMessage(result.text);
          recognizer.close();
          recognizer = undefined;
        },
        function (err) {
          console.trace("err - " + err);
          recognizer.close();
          recognizer = undefined;
        });
    }

    function sendMessage(message) {
      assistant.message({
        assistantId: assistantid,
        sessionId: sessionid,
        input: {
          'message_type': 'text',
          'text': message
        }
      })
        .then(res => {
          // speak the first reply Watson sent back
          say(res.result.output.generic[0]["text"]);
        })
        .catch(err => {
          console.log(err);
        });
    }

    function say(text) {
      // create the pull stream we need for the speech sdk.
      var pullStream = sdk.AudioOutputStream.createPullStream();

      // now create the audio-config pointing to our stream and
      // the speech config specifying the language.
      var speechConfig = sdk.SpeechConfig.fromSubscription(subscriptionKey, serviceRegion);

      // setting the synthesis language to English.
      speechConfig.speechSynthesisLanguage = "en-US";
      var audioConfig = sdk.AudioConfig.fromStreamOutput(pullStream);

      // create the speech synthesizer.
      var synthesizer = new sdk.SpeechSynthesizer(speechConfig, audioConfig);

      // the synthesized reply is written to the same wav file as the recording
      synthesizer.speakTextAsync(
        text,
        function (result) {
          console.log(result);
          // when the wav file is ready, play it
          player.play(filename, function(err) {
            if (err) throw err;
          });
          synthesizer.close();
          synthesizer = undefined;
        },
        function (err) {
          console.trace("err - " + err);
          synthesizer.close();
          synthesizer = undefined;
        },
        filename);
    }
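The recognizer in the script reports back through a pair of callbacks. If you prefer to chain the steps with promises, one possible approach is a small wrapper like the one below. `recognizeOnce` is a hypothetical helper, and it only assumes the object it receives has `recognizeOnceAsync(onResult, onError)` and `close()`, as the recognizer in the script does. A fake recognizer is used so the sketch runs without the Speech SDK.

```javascript
// Sketch: turn the callback-style recognizer call into a Promise.
function recognizeOnce(recognizer) {
  return new Promise((resolve, reject) => {
    recognizer.recognizeOnceAsync(
      result => {
        recognizer.close();   // release the recognizer once we have a result
        resolve(result.text); // hand back only the recognized text
      },
      err => {
        recognizer.close();
        reject(new Error(String(err)));
      }
    );
  });
}

// A fake recognizer so the wrapper can be exercised offline.
const fakeRecognizer = {
  closed: false,
  recognizeOnceAsync(onResult) {
    setImmediate(() => onResult({ text: 'hello' }));
  },
  close() {
    this.closed = true;
  },
};

const recognized = recognizeOnce(fakeRecognizer);
recognized.then(text => console.log('Recognized:', text));
```

With the real SDK object, the result could then be passed along as `recognizeOnce(recognizer).then(sendMessage)`.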

Once both Azure Speech and Watson Assistant are ready, test on your own machine first. You should get something similar to what is shown in the video below.

When everything is tested, deploy the Node.js script to your Raspberry Pi over SFTP, using either the sftp shell command or a client such as FileZilla. Name it something like watson.js, then test it by typing the command below.

    node watson.js
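Since the script now lives on another device, you may prefer not to keep your keys inside the file. One option, sketched below, is to read them from environment variables. The variable names and the `loadConfig` helper are my own invention, so rename them as you like.

```javascript
// Hypothetical variable names; pick whatever suits your setup.
const REQUIRED = [
  'WATSON_ASSISTANT_ID',
  'WATSON_APIKEY',
  'WATSON_URL',
  'AZURE_SPEECH_KEY',
  'AZURE_SPEECH_REGION',
];

// Return the settings as an object, or throw listing whatever is missing.
function loadConfig(env) {
  const missing = REQUIRED.filter(name => !env[name]);
  if (missing.length > 0) {
    throw new Error('Missing environment variables: ' + missing.join(', '));
  }
  return {
    assistantId: env.WATSON_ASSISTANT_ID,
    watsonApiKey: env.WATSON_APIKEY,
    watsonUrl: env.WATSON_URL,
    speechKey: env.AZURE_SPEECH_KEY,
    speechRegion: env.AZURE_SPEECH_REGION,
  };
}

// Example with made-up values; in watson.js you would pass process.env instead.
const exampleEnv = {
  WATSON_ASSISTANT_ID: 'example-id',
  WATSON_APIKEY: 'example-watson-key',
  WATSON_URL: 'https://example.com/watson',
  AZURE_SPEECH_KEY: 'example-speech-key',
  AZURE_SPEECH_REGION: 'northeurope',
};

console.log(loadConfig(exampleEnv).speechRegion);
```

The placeholders in the script would then be filled from `loadConfig(process.env)`, with the variables exported in the shell on the Pi before running the command.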

Say hello to your new AI assistant. You should hear it reply, confirming that you have successfully created your first multi-cloud AIoT assistant.

Try playing around with Watson intents and experiment with its features to learn more about its capabilities. I hope you found this article helpful. Stay tuned for my future articles by following me at @fadyanwar.
